Why Synchronous Atomic Composability Matters

Because without it, distributed ledger systems cannot guarantee reliable, deterministic and tamper-proof execution of complex, multi-component transactions in a trustless manner.

When grappling with atomic composability, people often fall into two traps: underestimating the importance of synchrony and conflating its two fundamental elements.

First, there's the atomic execution of a transaction, where all its component parts either succeed together or fail together.

Vitalik Buterin memorably described this as "money legos" that fit together.

If the transaction executes successfully, great - but that's not the end of the story.

But there's a second crucial aspect that often gets overlooked - the commitment of those state changes onto the ledger itself.

It's not enough for the transaction components to execute atomically; that execution also has to be finalized immutably on-chain.

Many don't fully grasp the differences between these two facets of atomic composability, or why both are essential for trustless, secure, and robust decentralized applications.

Additionally, the synchrony model is often overlooked entirely, even though it can have a huge effect on the outcome of what appears to be a simple transaction.

In this article, I will tell you exactly how this works, why it matters and why overlooking the importance of Synchronous Atomic Composability will lead to a path full of complexities and pitfalls.

Before we dive in:

I write regularly and cover everything crypto, tech, and some of my musings.

With social media buzzing with crypto, this helps me keep my thoughts in one place.

Interested? Head over to my Substack and join the conversation.

Atomic Composability = Atomic Execution + Atomic Commitment

First of all, what does the term 'atomic' mean?

The term "atomic" draws an analogy from physics, where an atom was once thought to be the smallest, indivisible unit of matter. Similarly, in computer science, an atomic operation is the smallest, indivisible unit of execution that cannot be broken down further from the perspective of the system.

Atomic composability builds upon this concept of atomicity and applies it to the composition of multiple operations or instructions within a single transaction.

Atomic composability in blockchain systems consists of two key components: atomic execution and atomic commitment.

First, there's the atomic execution of a transaction, which refers to the all-or-nothing nature of transaction processing. When a transaction is submitted, either all of its operations are executed successfully, or none of them are.
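To make the all-or-nothing property concrete, here is a minimal Python sketch of atomic execution on its own. The names `execute_atomically`, `transfer` and `TransactionFailed` are hypothetical, and the "state" is just a dict of balances rather than a real ledger. Each operation runs against a scratch copy; if any operation fails, the copy is discarded and the original state is returned unchanged.

```python
from copy import deepcopy
from typing import Callable, Dict, List

State = Dict[str, int]
Operation = Callable[[State], None]


class TransactionFailed(Exception):
    """Raised by an operation to abort the whole transaction."""


def transfer(src: str, dst: str, amount: int) -> Operation:
    """Hypothetical operation: move `amount` from `src` to `dst`."""
    def op(state: State) -> None:
        if state.get(src, 0) < amount:
            raise TransactionFailed(f"{src} has insufficient balance")
        state[src] -= amount
        state[dst] = state.get(dst, 0) + amount
    return op


def execute_atomically(state: State, ops: List[Operation]) -> State:
    """Apply every operation, or none of them.

    Work happens on a scratch copy; the original is returned untouched
    if any operation fails, otherwise the fully updated copy is returned.
    """
    scratch = deepcopy(state)
    try:
        for op in ops:
            op(scratch)
    except TransactionFailed:
        return state  # partial changes on `scratch` are simply discarded
    return scratch


balances = {"alice": 10, "bob": 0, "carol": 0}
print(execute_atomically(balances, [transfer("alice", "bob", 4), transfer("bob", "carol", 3)]))
# {'alice': 6, 'bob': 1, 'carol': 3}
print(execute_atomically(balances, [transfer("alice", "bob", 4), transfer("bob", "carol", 9)]))
# {'alice': 10, 'bob': 0, 'carol': 0}: the second step fails, so the first is dropped too
```

Nothing in this sketch makes the result durable or visible to anyone else; writing it to the ledger is the separate job of atomic commitment.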

Atomic commitment, on the other hand, deals with the finalization of state changes on the ledger.

It ensures that once a transaction has been executed, all its effects are permanently and irreversibly written to the ledger.

This step ensures that the updated state is correctly reflected and available for subsequent transactions.

This is crucial for maintaining consistency across a distributed system and preventing partial updates that could lead to inconsistent states.

In monolithic blockchain systems like Ethereum Layer 1 (L1), atomic commitment is generally guaranteed due to how consensus and execution work. When a validator receives a block of transactions, it locks the relevant state, executes the transactions, and then commits the changes.

The process typically follows these steps:

A transaction arrives for execution.

The validator locks the required state.

The state is read and changes are computed.

The updated state is written back.

This process naturally ensures atomic commitment, as the validator has full control over all the state changes.

Even in cases of failed transactions, such as on Ethereum where users pay for failed computations, atomic commitment is maintained. The validator locks the user's account balance, deducts the gas fee, and logs the failure - all as part of a single, atomic operation.
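The four steps and the failed-transaction case can be sketched as a toy single-validator ledger in Python. This is a simplified model of the description above, not Ethereum's actual state transition function; `Tx`, `Ledger` and the flat `gas_fee` are hypothetical. Because one validator holds the lock for the whole read-compute-write cycle, the fee deduction, the transfer (if any) and the log entry land together in a single write.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Tx:
    sender: str
    recipient: str
    amount: int
    gas_fee: int  # hypothetical flat fee, charged even if the transfer fails


@dataclass
class Ledger:
    balances: Dict[str, int]
    log: List[str] = field(default_factory=list)
    lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    def process(self, tx: Tx) -> bool:
        with self.lock:  # 1-2. the transaction arrives; lock the state
            if self.balances.get(tx.sender, 0) < tx.gas_fee:
                return False  # cannot even pay for gas: never executed
            new = dict(self.balances)  # 3. read the state and compute changes
            new[tx.sender] -= tx.gas_fee
            ok = new[tx.sender] >= tx.amount
            if ok:
                new[tx.sender] -= tx.amount
                new[tx.recipient] = new.get(tx.recipient, 0) + tx.amount
            self.balances = new  # 4. write everything back in one step
            self.log.append(f"{tx.sender}->{tx.recipient}: {'ok' if ok else 'failed'}")
            return ok
```

A failed transfer still leaves exactly one consistent outcome behind: the fee is gone, the failure is logged, and no half-applied transfer exists.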

Atomic execution and atomic commitment are often conflated because in monolithic designs you essentially get atomic commitment for free.

The importance of atomic commitment becomes evident when considering complex, multi-step transactions that span across different shards, chains, or Layer 2 (L2) solutions.

Without both, you can't truly call it atomic composability.

Synchronous vs. Asynchronous Atomic Commitment

Atomic commitment can be implemented in two primary ways: synchronously or asynchronously.

Synchronous atomic commitment requires all participants of a transaction to be available and responsive during both execution and commitment processes, allowing for real-time coordination and decision-making. This approach provides stronger guarantees but can be challenging to implement in large-scale distributed systems due to the effects of network latency and the potential for node failures or dishonest actors.
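A familiar example of synchronous atomic commitment outside blockchains is the two-phase commit (2PC) protocol from distributed databases. It is used here purely as an illustration, not as the mechanism of any particular chain, and `Participant` and `two_phase_commit` are hypothetical names. No participant commits until every participant has locked its state and voted yes in the prepare round.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Participant:
    name: str
    balance: int
    online: bool = True
    locked: bool = False
    pending: int = 0

    def prepare(self, delta: int) -> bool:
        """Phase 1: lock the state and vote yes only if the change is valid."""
        if not self.online or self.locked or self.balance + delta < 0:
            return False
        self.locked, self.pending = True, delta
        return True

    def commit(self) -> None:
        """Phase 2 (everyone voted yes): apply the change, release the lock."""
        self.balance += self.pending
        self.locked, self.pending = False, 0

    def abort(self) -> None:
        """Phase 2 (someone voted no): drop the change, release the lock."""
        self.locked, self.pending = False, 0


def two_phase_commit(changes: List[Tuple[Participant, int]]) -> bool:
    """Commit every change or none; every participant must respond to vote."""
    prepared: List[Participant] = []
    for participant, delta in changes:
        if not participant.prepare(delta):
            for p in prepared:
                p.abort()
            return False
        prepared.append(participant)
    for p in prepared:
        p.commit()
    return True
```

In a real network the cost of synchrony shows up here: if a participant votes yes and then goes silent, or the coordinator fails between the two phases, the prepared participants are left holding their locks. That is why this approach is hard to scale across many independent, possibly faulty nodes.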

Asynchronous atomic commitment, on the other hand, allows participants to commit their parts of the transaction independently, often relying on message-passing through an intermediary layer. While this approach can be more scalable, it introduces complexities in handling failures, timeouts, and ensuring consistency across all participants.

As a result, atomic execution is easier to achieve than atomic commitment in sharded or L2 systems.

The main challenge lies in coordinating the separate validator sets to ensure they all agree on the action to take and adhere to that agreement before moving on.

Atomic Commitment in Sharded Systems and L2 Scaling Solutions

Monolithic blockchains inherently provide atomic commitment. However, this guarantee falls apart in the case of sharded networks or L2 solutions, which must coordinate transactions in a complex environment.

Depending on your sharding architecture, your state model, how you handle locking in that state model, and various other factors, you may achieve atomic execution but still fail to attain atomic commitment.

I believe one of the key reasons Ethereum moved away from execution sharding was the challenge of ensuring atomic commitment across different shards.

Sharding divides the system into smaller, more manageable pieces, each with its own state and validator set.

The problem arises when you split state across multiple validator sets. This division introduces complexity in achieving atomic commitment across different shards.

In a sharded network, a transaction might need to interact with states managed by distinct validator sets. For atomic commitment, these validator sets must coordinate closely.

They need to ensure that all involved parties agree on the state changes before committing them. This coordination is crucial to prevent inconsistencies and ensure that all state changes are synchronized across the relevant shards.

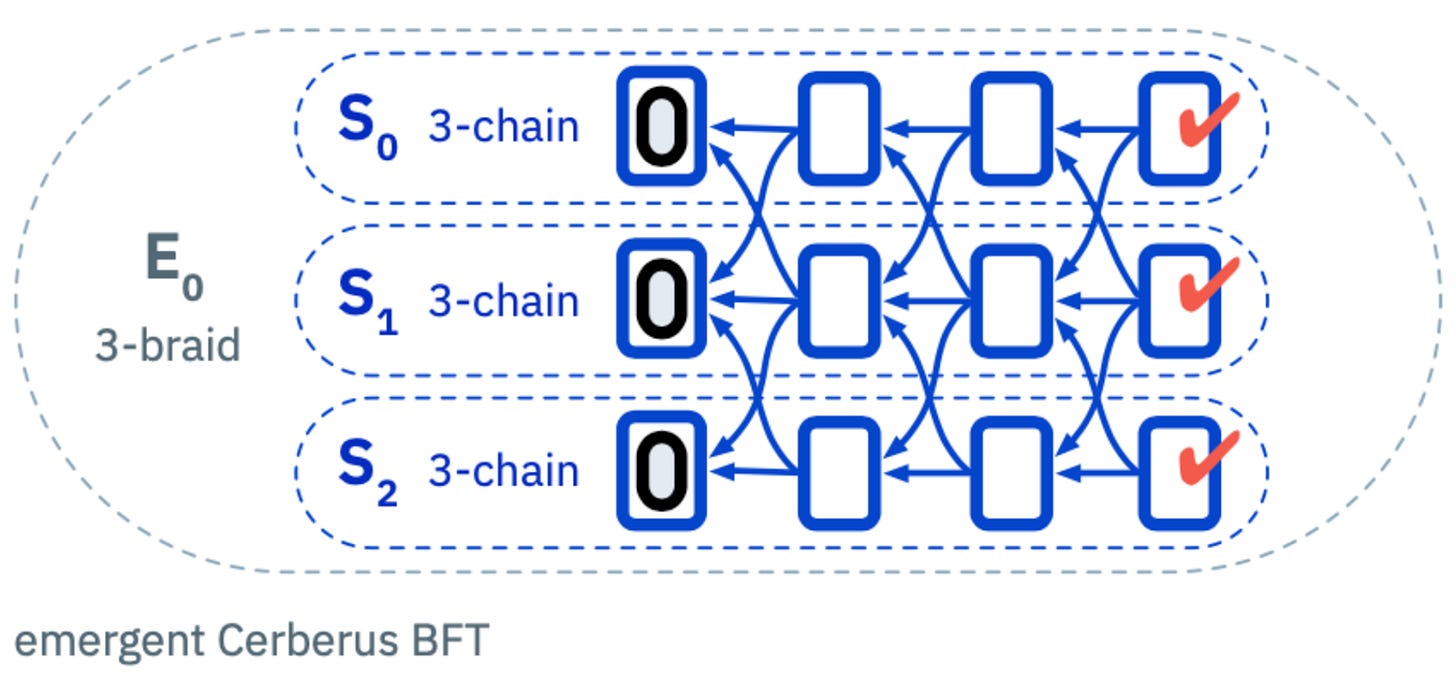

For example, the Cerberus consensus protocol solves this problem in an elegant manner. It introduces the concept of 'braiding', where the required validator sets form a temporary unified validator set for a specific transaction, enabling efficient cross-shard communication and consensus.

When a transaction involves multiple shards, the protocol dynamically creates a "braid" of the relevant validator sets, allowing them to reach consensus specifically for that transaction.

This quasi-synchronous approach ensures that once consensus is reached, each validator set commits the state changes without violating the agreed-upon state locks. This guarantees that no further changes are made to that state until the initial transaction's state changes are committed.

Once the temporary validator set disbands, each participating validator set is bound to honor the agreed changes before making any further modifications to that state with other transactions.

The temporary validator sets described by Cerberus are very efficient, and any single validator set may be involved in thousands of them at any moment, thus processing thousands of transactions in parallel.
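The locking property behind braiding can be illustrated with a small Python model. This is not the Cerberus protocol, which also runs consensus among the braided validator sets; it only shows the lock bookkeeping. `LockTable`, `braid_of`, `try_braid`, `finish_braid` and the `owner` mapping from state keys to shards are all hypothetical. A transaction's braid is exactly the set of shards owning the keys it touches: each of them locks its share of keys, or none of them keeps any lock, and transactions with disjoint keys never block each other.

```python
from typing import Dict, Set


class LockTable:
    """Per-shard record of which transaction holds each state key."""

    def __init__(self) -> None:
        self.holders: Dict[str, str] = {}

    def acquire_all(self, tx_id: str, keys: Set[str]) -> bool:
        """Lock every key for tx_id, or lock nothing if any key is taken."""
        if any(self.holders.get(k, tx_id) != tx_id for k in keys):
            return False
        for k in keys:
            self.holders[k] = tx_id
        return True

    def release(self, tx_id: str) -> None:
        self.holders = {k: t for k, t in self.holders.items() if t != tx_id}


def braid_of(keys: Set[str], owner: Dict[str, int]) -> Set[int]:
    """The shards (validator sets) that must take part in this transaction."""
    return {owner[k] for k in keys}


def try_braid(tx_id: str, keys: Set[str], owner: Dict[str, int],
              tables: Dict[int, LockTable]) -> bool:
    """Every shard in the braid locks its keys, or no shard keeps any lock."""
    locked = []
    for shard in sorted(braid_of(keys, owner)):
        if not tables[shard].acquire_all(tx_id, {k for k in keys if owner[k] == shard}):
            for s in locked:
                tables[s].release(tx_id)
            return False
        locked.append(shard)
    return True


def finish_braid(tx_id: str, keys: Set[str], owner: Dict[str, int],
                 tables: Dict[int, LockTable]) -> None:
    """After every shard has committed its changes, the braid disbands."""
    for shard in braid_of(keys, owner):
        tables[shard].release(tx_id)
```

Two transactions touching disjoint keys both succeed in `try_braid` at the same time, which is the parallelism described above; a transaction that overlaps a held key must wait until `finish_braid` releases it.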

This coordination is very difficult to ensure across Layer 2 systems, just as it is between different Layer 1 systems.

Why L2 Scaling Solutions Cannot Guarantee Atomic Commitment

The issue with L2s is that they operate with asynchronous commitment, and there is no standard for how they communicate with each other.

The only ‘standard’ method for L2s to communicate is through the L1 blockchain they reside upon, which acts as a messaging layer.

However, this approach introduces several problems that make atomic commitment nearly impossible.

When an L2 needs to interact with another L2, it can't directly communicate with the validators of that other L2. Instead, it has to go through this convoluted process:

The first L2 executes its part of the transaction and makes state changes.

It then rolls up or enshrines the output on the L1.

The second L2 has to constantly check the L1 for these "messages".

When it spots a relevant message, it reads it, interprets the requested state changes, and then performs its own execution.

Finally, it writes its results back to the L1.
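The five steps above can be modelled in a few lines of Python. `L1Inbox` and `Rollup` are hypothetical toy classes, not any rollup's real bridge contracts; the L1 is reduced to an append-only list of messages that each rollup scans from its own cursor.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Message = Tuple[str, str, dict]  # (recipient rollup, kind, payload)


@dataclass
class L1Inbox:
    """The L1 used purely as an append-only message log."""
    messages: List[Message] = field(default_factory=list)

    def post(self, to: str, kind: str, payload: dict) -> None:
        self.messages.append((to, kind, payload))


@dataclass
class Rollup:
    name: str
    balances: Dict[str, int]
    accepting: bool = True  # whether this rollup accepts incoming credits
    cursor: int = 0  # how much of the L1 log has already been read

    def send(self, l1: L1Inbox, to: str, user: str, amount: int) -> None:
        """Steps 1-2: execute locally (debit), then post the result to the L1."""
        self.balances[user] -= amount
        l1.post(to, "credit", {"user": user, "amount": amount, "reply_to": self.name})

    def poll(self, l1: L1Inbox) -> None:
        """Steps 3-5: read new L1 messages, execute, write the outcome back."""
        new, self.cursor = l1.messages[self.cursor:], len(l1.messages)
        for to, kind, p in new:
            if to != self.name:
                continue
            if kind == "credit" and self.accepting:
                self.balances[p["user"]] = self.balances.get(p["user"], 0) + p["amount"]
                l1.post(p["reply_to"], "accept", p)
            elif kind == "credit":
                l1.post(p["reply_to"], "reject", p)
            elif kind == "reject":
                self.balances[p["user"]] += p["amount"]  # compensating refund
```

Between `send` and the other rollup's `poll`, the funds have left one rollup without having arrived on the other, and a rejection only reaches the sender after a second round trip through the L1.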

This process is entirely asynchronous.

There's no synchronous or atomic execution here, not even semi-synchronous execution, let alone atomic commitment.

The first L2 has no idea if the second L2 will come to the same conclusions about the transaction or if it will even succeed on their end.

Now, let's say the transaction fails on the second L2. They write a reject message back to the L1. The first L2 sees this rejection and realizes it needs to undo its changes.

But what if something has happened in the meantime that prevents this rollback?

You might think, "Well, just keep a lock on the state until you see an accept or reject." But this lock could last for 40 seconds, 50 seconds, or even minutes. It could potentially never resolve if the other L2 is down or faulty. So you implement a timeout, but that introduces its own problems. What if you time out and undo your changes, but shortly afterwards the other L2 writes back saying the transaction actually succeeded?

Now you've undone something you shouldn't have, and you might not be able to reapply the change because other things have changed in the meantime.
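The timeout dilemma can be reduced to a small decision function. In this hypothetical Python model, L2-A locks its state at t = 0 and waits `timeout` seconds for L2-B's verdict, which reaches the L1 at `reply_at` seconds, or never (`None`). The function and parameter names are made up for illustration.

```python
from typing import Optional, Tuple


def resolve(timeout: float, reply_at: Optional[float], verdict: str) -> Tuple[str, str, bool]:
    """Return (L2-A's action, L2-B's action, consistent?) for one cross-L2 transfer.

    L2-B has already applied `verdict` ("accept" or "reject") on its own side;
    L2-A only learns it if the reply arrives before its timeout expires.
    """
    b_action = "commit" if verdict == "accept" else "undo"
    if reply_at is not None and reply_at <= timeout:
        a_action = b_action  # reply arrived in time: both sides agree
    else:
        a_action = "undo"  # timed out: L2-A releases its lock and reverts
    return a_action, b_action, a_action == b_action


print(resolve(60, 30, "accept"))    # ('commit', 'commit', True)
print(resolve(60, 61, "accept"))    # ('undo', 'commit', False)
print(resolve(60, None, "reject"))  # ('undo', 'undo', True)
```

Raising the timeout narrows the inconsistent window but keeps state locked for longer; it never closes the window, because nothing bounds how late the reply can be.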

These issues can lead to hanging states, where the system is unable to progress due to unresolved transactions.

It can also necessitate complex state maintenance operations, which are prone to errors and inconsistencies.

In contrast, monolithic L1 systems like Ethereum don't face these issues because all state changes are processed, executed and committed in a single, atomic operation.

The lack of this capability in L2 scaling solutions means that as interactions between L2s become more complex, it just turns into a real mess, and that has some real consequences.

The Repercussions of Non-Synchronous Commitment

Consider a scenario where a developer writes code expecting a particular result within a specific timeframe, but it doesn't happen. Or they assume a piece of state will have a certain value, because it has a million times before, but now it doesn't.

The transaction executes on the first L2 and everything seems fine, but the second L2 rejects it because of that assumed value.

Suddenly, they're faced with hanging states.

The developer did their part, so why isn't everything fine?

The answer: no atomic commitment.

In Web2 development, many of these concerns are handled behind the scenes by databases or APIs.

But in the blockchain world, aka Web3, especially with L2 solutions, developers are suddenly faced with complexities they may not be prepared for, or may not even realize they need to handle.

Now the developer needs to unravel the transaction they just made, handle all sorts of edge cases, and implement intricate error-handling mechanisms. It's enough to make many developers throw up their hands and say, "I'm off to JavaScript on Web2!"

The problem becomes even more pronounced when dealing with different types of rollups and chains.

Each L2 solution might have its own execution environment, consensus mechanism, and set of primitives. There's no guarantee that all L2 developers will implement the same standards for error handling or cross-chain communication.

Some proposed solutions, like aggregation layers, attempt to unify and streamline transactions across multiple blockchain networks. However, these are never truly atomic. Both sides are always waiting for a message from the other side, and there are countless reasons why that message might be delayed or never arrive.

The only feasible and safe workaround in current L2 architectures is to accept that transaction finality times are unbounded. An L2 might execute its part of a transaction, lock the relevant state, and then wait for confirmations from all other participants.

But what if one of those participants goes offline? How long should the system wait before timing out? And what if it times out just before the final confirmation arrives?

These scenarios lead to a constant balancing act between waiting for confirmations and risking timeouts. The time to finality could stretch from minutes to hours, introducing significant uncertainty into the system.

At the heart of all these issues lies a fundamental problem: your communication mechanism is really slow.

And these complexities of asynchronous models are the core reason why Ethereum moved away from execution sharding.

However, now with a rollup-centric roadmap, they are looking down the barrel of the same problems they ran away from in the first place.

The "Train-and-Hotel Problem" of Asynchronous Architectures

So let’s consider some real-life scenarios, including DeFi ones, to really understand the consequences of these design choices.

Imagine a scenario where execution happens sequentially: you execute, send your result to another node, they execute and send their result to someone else.

But what if one node goes down in the middle of this process?

From a user experience perspective, consider the "Train-and-Hotel Problem" Vitalik Buterin once discussed in his blogs about sharding.

You want to book a hotel, a train ticket, and a car for your vacation, but you need all three or nothing at all. This requires both atomic execution and atomic commitment. In asynchronous architectures, this can become incredibly messy.

For example, in what order do you process these bookings? Is the hotel more important than the car or the train? If you book the hotel and train, but the car isn't available, the transaction fails. Now you need to undo the hotel and train bookings. But what if those systems had already committed the bookings? The complexity ripples out, affecting multiple real-world systems.
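A common asynchronous workaround is the saga pattern from distributed systems: commit each booking as you go and, if a later one fails, run compensating cancellations in reverse order. The Python sketch below illustrates that general pattern; `Booking` and `book_trip_saga` are hypothetical, and the booking order is simply the order of the list. It shows where sagas break down: a step that cannot be compensated, such as a non-refundable hotel, is left stranded.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Booking:
    name: str
    available: bool = True
    refundable: bool = True
    booked: bool = False

    def book(self) -> bool:
        if self.available:
            self.booked = True
        return self.booked

    def cancel(self) -> bool:
        """Compensating action; returns True if the booking is now undone."""
        if self.booked and self.refundable:
            self.booked = False
        return not self.booked


def book_trip_saga(steps: List[Booking]) -> Tuple[bool, List[str]]:
    """Book each step in order; on failure, cancel earlier steps in reverse.

    Returns (trip booked?, names of bookings that could not be undone).
    """
    done: List[Booking] = []
    for step in steps:
        if not step.book():
            stranded = [b.name for b in reversed(done) if not b.cancel()]
            return False, stranded
        done.append(step)
    return True, []


hotel, train, car = Booking("hotel", refundable=False), Booking("train"), Booking("car", available=False)
print(book_trip_saga([hotel, train, car]))  # (False, ['hotel'])
```

With atomic execution and atomic commitment there is nothing to compensate: the three bookings form one transaction that either happens or doesn't.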

In DeFi scenarios, the problems can be even more acute.

Consider a situation where you want to purchase a limited NFT on Base that you discovered on Farcaster, but your funds are on Arbitrum, similar to what @ellierdavidson wanted to do.

In an atomic system, you could ensure that the transaction only proceeds if the NFT is available. If the NFT has already been minted, the transaction would fail cleanly without any assets being transferred.

However, in an asynchronous system, you might end up in a problematic situation if the transaction does not proceed as intended:

You'd initiate the transfer of funds from Arbitrum to Base.

Then you'd attempt to purchase the NFT on Base, but it fails because the NFT has already been minted.

Now your funds are stuck on Base, where you didn't originally want them to be.

You'd need to manually transfer the funds back to Arbitrum, or have a smart contract do it for you.

But while all this was happening, the value of the funds might have fluctuated.

You end up with fewer funds on Arbitrum than you started with, plus you've paid transaction fees twice.

If the return transaction fails, you might need to try again and again, paying more fees each time.

Each of these additional transactions comes with fees and potential further losses due to price movements.
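The fee side of the failed mint can be sketched with hypothetical numbers. Amounts are in cents, the flat per-transaction fee is made up, gas for the failed mint attempt is ignored to match the "fees twice" framing, and price movement is not modelled at all; `atomic_path` and `async_path` are illustrative names.

```python
def atomic_path(balance: int) -> int:
    """NFT already minted: the single atomic transaction fails and moves nothing.

    (A failed transaction may still cost some gas; that is ignored here.)
    """
    return balance


def async_path(balance: int, fee: int, failed_returns: int = 0) -> int:
    """NFT already minted, handled asynchronously across two chains.

    1. Bridge Arbitrum -> Base: one fee.
    2. The mint on Base fails (its gas is ignored); funds now sit on Base.
    3. Bridge Base -> Arbitrum: one fee per attempt, including failed ones.
    """
    balance -= fee  # step 1
    balance -= fee * (failed_returns + 1)  # step 3, with retries
    return balance


start, fee = 100_000, 150  # hypothetical: $1,000.00 and a $1.50 fee per transaction
print(atomic_path(start))                       # 100000
print(async_path(start, fee))                   # 99700
print(async_path(start, fee, failed_returns=2)) # 99400
```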

None of the proposed solutions - UX improvements, bridges, or shared sequencing - can fully solve the fundamental issues because the root problem is the slow, asynchronous nature of the communication itself.

And this is exactly why execution sharding was crossed out of Ethereum’s scalability roadmap.

But look at what has replaced it: an even more complex set of problems.

The atomic approach sidesteps all these issues: It's a simple "it either happened or it didn't" scenario, avoiding the need for complex rollback mechanisms and protecting users from unexpected partial executions.

Synchronous Atomic Composability Really Matters!

The solution to all the complexities that result from asynchronous models lies in a system that guarantees both atomic execution and atomic commitment - the two key components of true atomic composability.

A system where transactions either execute and commit completely, or not at all. No partial state, no hanging transactions, no unexpected outcomes.

This is the core aim of the Cassandra test network: to create a model that guarantees atomic commitment, avoiding the pitfalls and complexities of asynchronous systems, while providing deterministic safety and reliable performance.

It’s an elegant solution that paves the way forward without adding unnecessary complications.

Stay tuned for a series soon detailing how Cassandra solves these problems by building upon a simple, time-tested model: the Nakamoto consensus protocol of Bitcoin.

Follow my Substack so you don't miss a thing! 😉